Creating a Snowflake Cortex RAG System in Minutes with RLAMA

One of Rlama-pro's most powerful features is its ability to create Retrieval-Augmented Generation (RAG) systems powered by Snowflake Cortex Search. In this guide, we'll walk through using RLAMA's interactive wizard to set up a complete Cortex RAG system in just a few minutes, without writing a single line of code.

The RLAMA Snowflake Wizard

RLAMA's Snowflake wizard automates the entire process of creating a RAG system with Snowflake Cortex. It guides you through:

- Setting up a Snowflake stage for document storage

- Uploading and processing documents

- Creating the necessary database structures

- Configuring Cortex Search Service

- Setting up the RAG with your choice of inference model

Let's walk through the process step by step.

Step-by-Step Setup Process

1. Create a Snowflake Profile

First, you need to set up a Snowflake profile with your account credentials:

rlama snowflake setup my-snowflake --account=myaccount --user=myuser --warehouse=compute_wh

This command creates a profile that securely stores your Snowflake connection details.

Usage:

Parameters:

profile-name: Name to identify your Snowflake profile

Options:

--account: Your Snowflake account identifier

--user: Username for Snowflake authentication

--warehouse: Default warehouse to use

Once executed, RLAMA will prompt you for your password and store your credentials securely for future commands.

2. Launch the Wizard

Next, launch the interactive wizard:

rlama snowflake wizard my-snowflake

This command starts the wizard, guiding you through the entire process:

🧙 Welcome to the RLAMA Snowflake Cortex Wizard! 🧙

Snowflake profile: my-snowflake

Step 1: Select database and schema

Enter database name: RLAMA_AI

Enter schema name [PUBLIC]:

3. Create Text Chunker Function

The wizard will help you create a text chunker function in Snowflake:

Step 2: Create text chunker function

Create chunker function? (y/n) [y]:

Enter chunk size [1000]:

Enter chunk overlap [200]:

This creates a Python UDF in Snowflake based on LangChain's RecursiveCharacterTextSplitter that will efficiently chunk your documents.
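To see how the chunk size and chunk overlap prompts interact, here is a minimal pure-Python sketch of overlapping fixed-size windows. It is a deliberate simplification: LangChain's RecursiveCharacterTextSplitter also tries to break on separators such as paragraphs and sentences, which this sketch does not attempt. The function name chunk_text is hypothetical and is not the UDF the wizard creates.

```python
def chunk_text(text: str, chunk_size: int = 1000, chunk_overlap: int = 200) -> list[str]:
    """Split text into fixed-size character windows that overlap.

    Simplified illustration only: no separator-aware splitting.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")

    step = chunk_size - chunk_overlap  # how far each window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks


# With the wizard defaults (1000 / 200), a 2500-character document
# yields windows starting at offsets 0, 800 and 1600.
print([len(piece) for piece in chunk_text("x" * 2500)])  # [1000, 1000, 900]
```

Each chunk repeats the last 200 characters of the previous one, so a sentence that straddles a boundary still appears whole in at least one chunk.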

4. Set Up Document Storage

Next, the wizard helps you create a stage for document storage:

Step 3: Create storage stage

Enter stage name [rlama_docs]: rlama_demo

Once created, you'll have options for uploading documents:

Step 4: Upload documents to stage

Choose how you want to upload documents:

1. I'll upload them manually (using SnowSQL or Web UI)

2. Upload documents from my local folder

Enter your choice (1/2) [1]:

If you choose manual upload, the wizard provides detailed instructions:

Please upload your documents to stage @rlama_demo using one of these methods:

1. Using SnowSQL CLI:

PUT file:///path/to/local/files/*.pdf @RLAMA_AI.PUBLIC.rlama_demo;

2. Using Snowflake Web UI:

Navigate to RLAMA_AI.PUBLIC database and schema, then use the 'Stages' tab to upload files to @rlama_demo
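If you upload files from several folders, it can help to generate the PUT statements rather than type them. The sketch below only builds the statement text in the shape the wizard prints above; the helper names are hypothetical. AUTO_COMPRESS is a standard PUT option, but whether the wizard sets it is not stated here, so it is left off unless you ask for it.

```python
from typing import Optional


def qualified_stage(database: str, schema: str, stage: str) -> str:
    """Return the fully qualified stage reference, e.g. @DB.SCHEMA.stage."""
    return f"@{database}.{schema}.{stage}"


def put_statement(local_glob: str, database: str, schema: str, stage: str,
                  auto_compress: Optional[bool] = None) -> str:
    """Build a SnowSQL PUT statement for an absolute local path or glob.

    auto_compress=None omits the option (an assumption about what you want);
    pass False to keep files such as PDFs uncompressed on the stage.
    """
    statement = f"PUT file://{local_glob} {qualified_stage(database, schema, stage)}"
    if auto_compress is not None:
        statement += f" AUTO_COMPRESS = {'TRUE' if auto_compress else 'FALSE'}"
    return statement + ";"


print(put_statement("/path/to/local/files/*.pdf", "RLAMA_AI", "PUBLIC", "rlama_demo"))
```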

5. Create the Documents Table

The wizard generates a SQL script to create and populate a table for your documents:

Step 5: Create documents table

Enter table name [DOCUMENTS]: TB_AI_RAG

The generated SQL will:

- Create a table for document storage

- Extract text from your files using Snowflake's PARSE_DOCUMENT function

- Apply chunking to prepare your data for embedding

You can choose to execute this SQL automatically or copy it to run manually.
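The SQL the wizard generates is not reproduced in this guide, so the following is only a rough sketch of what such a script might look like, rendered from Python. It assumes the stage has a directory table enabled, that the chunker is a table function named TEXT_CHUNKER returning a CHUNK column (a hypothetical name), and that the file path serves as TITLE. Only the table, stage, and CHUNK/TITLE column names come from the wizard session shown in this guide.

```python
def documents_table_sql(database: str, schema: str, table: str, stage: str,
                        chunker: str = "TEXT_CHUNKER") -> str:
    """Render an illustrative CREATE TABLE script (not RLAMA's actual output)."""
    prefix = f"{database}.{schema}"
    stage_ref = f"@{prefix}.{stage}"
    return f"""\
CREATE OR REPLACE TABLE {prefix}.{table} AS
WITH parsed AS (
    -- One row per staged file, with its extracted text.
    SELECT
        relative_path AS TITLE,
        TO_VARCHAR(
            SNOWFLAKE.CORTEX.PARSE_DOCUMENT(
                {stage_ref}, relative_path, {{'mode': 'LAYOUT'}}
            ):content
        ) AS FULL_TEXT
    FROM DIRECTORY({stage_ref})
)
-- One row per chunk; the chunker is assumed to be a table function.
SELECT p.TITLE, c.CHUNK
FROM parsed p, TABLE({prefix}.{chunker}(p.FULL_TEXT)) c;
"""


print(documents_table_sql("RLAMA_AI", "PUBLIC", "TB_AI_RAG", "rlama_demo"))
```

Review the script the wizard actually produces before running it; the structure above is meant to show the three steps (create, extract, chunk), not to replace it.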

6. Create the Cortex Search Service

After your documents are processed, the wizard helps you set up a Cortex Search Service:

Step 6: Create Cortex Search Service

Enter service name: rlama_docs_ai

Enter warehouse name [COMPUTE_WH]:

Executing: rlama snowflake create-service my-snowflake rlama_docs_ai --database=RLAMA_AI --schema=PUBLIC --warehouse=COMPUTE_WH --table=TB_AI_RAG --columns=CHUNK,TITLE

The wizard runs the create-service command for you, using the database, schema, warehouse, table, and columns collected above.
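For readers who want to know what a Cortex Search Service definition looks like in SQL, here is a hedged sketch that renders a CREATE CORTEX SEARCH SERVICE statement from the same inputs. How RLAMA maps --columns to the statement is not documented here; this sketch assumes the first column is the searchable text and the rest are attributes, and the one-hour TARGET_LAG is an arbitrary illustrative value.

```python
def search_service_sql(service: str, database: str, schema: str, warehouse: str,
                       table: str, columns: list[str],
                       target_lag: str = "1 hour") -> str:
    """Render an illustrative Cortex Search Service definition.

    Assumption: columns[0] is the text to search, the rest are attributes.
    """
    if not columns:
        raise ValueError("at least one column is required")
    search_column, *attributes = columns
    prefix = f"{database}.{schema}"

    lines = [
        f"CREATE OR REPLACE CORTEX SEARCH SERVICE {prefix}.{service}",
        f"  ON {search_column}",
    ]
    if attributes:
        lines.append(f"  ATTRIBUTES {', '.join(attributes)}")
    lines += [
        f"  WAREHOUSE = {warehouse}",
        f"  TARGET_LAG = '{target_lag}'",
        f"  AS (SELECT {', '.join(columns)} FROM {prefix}.{table});",
    ]
    return "\n".join(lines)


print(search_service_sql("rlama_docs_ai", "RLAMA_AI", "PUBLIC",
                         "COMPUTE_WH", "TB_AI_RAG", ["CHUNK", "TITLE"]))
```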

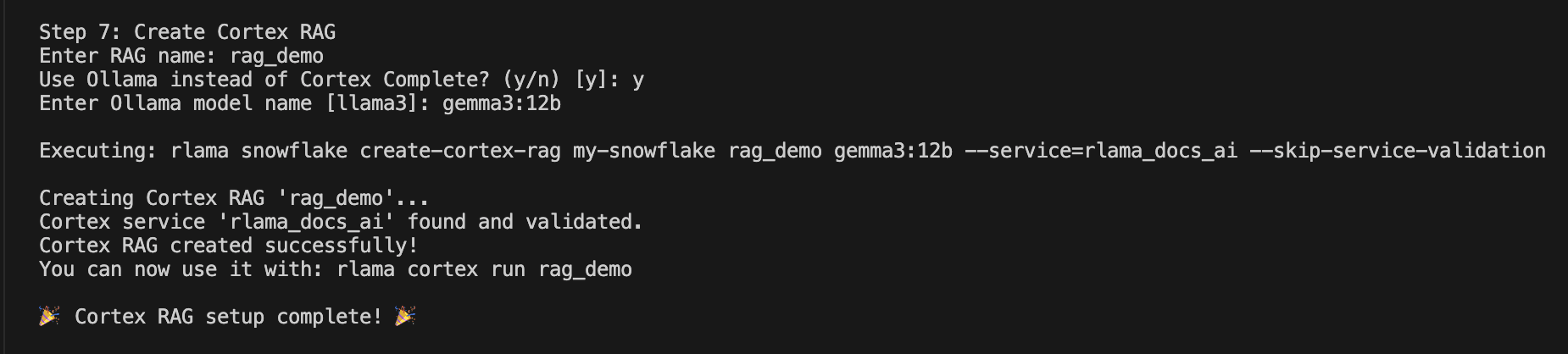

7. Create the Cortex RAG System

Finally, the wizard helps you create the actual RAG system:

Step 7: Create Cortex RAG

Enter RAG name: rag_demo

Use Ollama instead of Cortex Complete? (y/n) [y]:

Enter Ollama model name [llama3]: gemma3:12b

Executing: rlama snowflake create-cortex-rag my-snowflake rag_demo gemma3:12b --service=rlama_docs_ai --skip-service-validation

You can choose between:

- Using Ollama for local inference (more cost-effective)

- Using Cortex Complete for cloud-based inference (higher quality, higher cost)

The wizard completes the process:

Creating Cortex RAG 'rag_demo'...

Cortex service 'rlama_docs_ai' found and validated.

Cortex RAG created successfully!

You can now use it with: rlama cortex run rag_demo

🎉 Cortex RAG setup complete! 🎉

Using Your Cortex RAG

Once setup is complete, you can immediately start using your RAG system:

rlama cortex run rag_demo

This launches an interactive query interface:

❄️ SNOWFLAKE CORTEX RAG ASSISTANT ❄️

• RAG: rag_demo

• Service: rlama_docs_ai

• Engine: Ollama (gemma3:12b)

• Search Limit: 10 results

Enter your questions (type 'exit' to quit):

> how can I create a RAG with RLAMA?

The system will:

- Search your documents using Cortex Search Service

- Generate a contextualized response using your chosen model

- Provide a complete answer based on your documents
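The three steps above follow the usual retrieve-then-generate pattern. The sketch below shows that flow with the search and generation steps passed in as plain callables, so it runs without Snowflake or Ollama. The function names and the prompt wording are illustrative assumptions, not RLAMA's internals; the default limit of 10 mirrors the search limit shown in the interface.

```python
from typing import Callable


def build_prompt(question: str, passages: list[str]) -> str:
    """Combine the retrieved passages and the question into one prompt."""
    context = "\n\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


def answer(question: str,
           search: Callable[[str, int], list[str]],
           generate: Callable[[str], str],
           limit: int = 10) -> str:
    """Retrieve up to `limit` passages, then ask the model to answer from them."""
    passages = search(question, limit)
    if not passages:
        return "No relevant passages were found."
    return generate(build_prompt(question, passages))


# Stand-ins for Cortex Search and the inference model, for demonstration only.
def fake_search(query: str, limit: int) -> list[str]:
    return ["RLAMA provides a Snowflake wizard.", "The wizard creates a Cortex RAG."][:limit]


def fake_generate(prompt: str) -> str:
    return f"(model output for a prompt of {len(prompt)} characters)"


print(answer("how can I create a RAG with RLAMA?", fake_search, fake_generate))
```

Keeping retrieval and generation behind separate callables is what makes the hybrid setup possible: the search side can stay in Snowflake while the generation side points at a local model.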

Cost Optimization with the Hybrid Approach

One of the key advantages of RLAMA's Snowflake integration is the ability to use a hybrid approach:

- Use Snowflake Cortex Search for vector search and embedding

- Use local Ollama models for inference

This approach can significantly reduce costs compared to using Cortex Complete for inference, while still leveraging Snowflake's powerful search capabilities.

For a typical 1GB dataset with 10,000 monthly queries:

- Snowflake Cortex Search costs: 6.3 credits/month ($19-25)

- Inference options:

  - Claude 3.5 via Cortex Complete: 25.5 additional credits/month ($76-102)

  - Mixtral 8x7B via Cortex Complete: 2.2 additional credits/month ($6.6-8.8)

  - Local Ollama inference: $0 additional Snowflake costs

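By choosing local inference with Ollama, you pay only for Cortex Search: 6.3 credits/month instead of the 31.8 credits/month (6.3 + 25.5) needed with Claude 3.5. That cuts your Snowflake costs by about 80%, making enterprise-grade RAG accessible even with limited budgets. Compared with Mixtral 8x7B the saving is smaller, since inference adds only 2.2 credits/month.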
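The arithmetic behind these figures is easy to reproduce. The short script below uses only the credit numbers quoted above; the $3-4 per credit range is not stated in the article but is inferred from its own figures (6.3 credits quoted as $19-25), so treat it as an assumption and substitute your contract rate.

```python
SEARCH_CREDITS = 6.3  # Cortex Search, credits per month (from the figures above)

INFERENCE_CREDITS = {
    "Claude 3.5 via Cortex Complete": 25.5,
    "Mixtral 8x7B via Cortex Complete": 2.2,
    "Local Ollama": 0.0,
}

# Assumption: inferred from "6.3 credits/month ($19-25)"; not an official price.
USD_PER_CREDIT = (3.0, 4.0)


def monthly_credits(option: str) -> float:
    """Total Snowflake credits per month for search plus the chosen inference."""
    return SEARCH_CREDITS + INFERENCE_CREDITS[option]


def savings_with_ollama(option: str) -> float:
    """Fraction of Snowflake credits saved by switching `option` to local Ollama."""
    baseline = monthly_credits(option)
    return (baseline - monthly_credits("Local Ollama")) / baseline


for option in INFERENCE_CREDITS:
    total = monthly_credits(option)
    low, high = (total * rate for rate in USD_PER_CREDIT)
    print(f"{option}: {total:.1f} credits (${low:.0f}-{high:.0f}), "
          f"Ollama saves {savings_with_ollama(option):.1%}")
```

For Claude 3.5 this gives 25.5 / 31.8, or roughly 80%, which is where the headline figure comes from. These numbers cover Snowflake charges only; running Ollama locally still has hardware and electricity costs that are outside this comparison.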

Conclusion

RLAMA's Snowflake wizard makes it incredibly easy to create powerful RAG systems powered by Snowflake Cortex. By automating the complex setup process and offering cost-effective hybrid architectures, RLAMA democratizes access to enterprise-grade AI capabilities.

Try it today and see how quickly you can go from raw documents to an intelligent question-answering system!